Al5d

Abstract

This project attempts a tower stacking game using Lynxmotion’s AL5D robot arm and a commonly available webcam.

The robot arm uses a general-purpose USB webcam to obtain information such as the position, color, and distance of the blocks and dice. To save budget, I estimate distance with a monocular depth estimation method instead of using a depth camera.

Table of Contents

- INTRODUCTION

- PRELIMINARIES

- PLAYING A BLOCK STACKING GAME USING AFFORDABLE MANIPULATOR AND CAMERA

- EXPERIMENTAL VALIDATION

- DISCUSSION

- CONCLUSION

- CODE FOR PAPER

- REFERENCE

INTRODUCTION

PRELIMINARIES

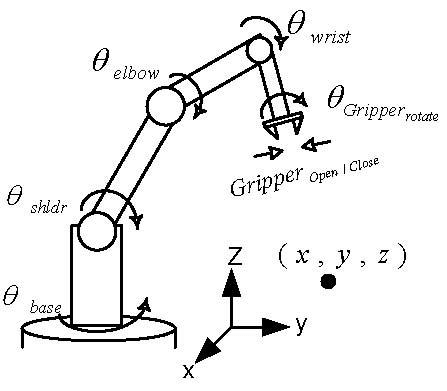

Hardware Description

Robot Arm Structure

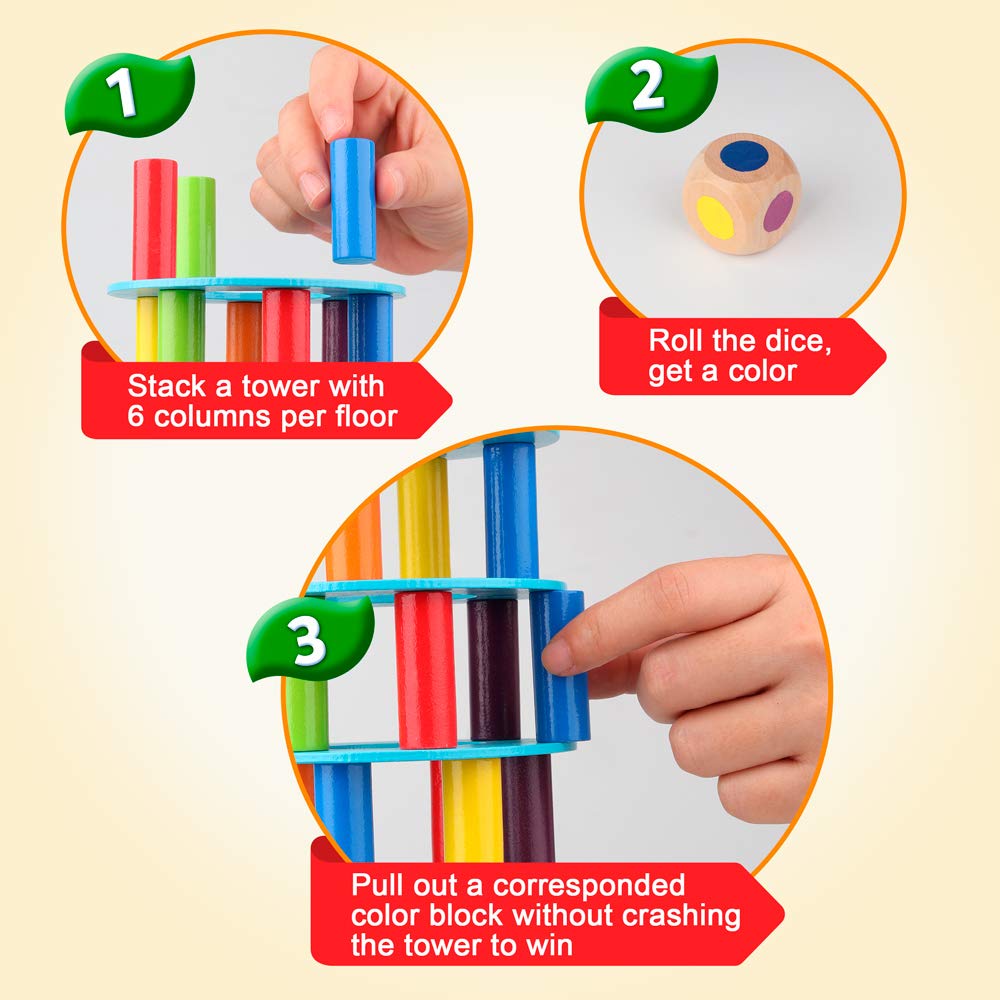

Tower Game Rule

Object detection and segmentation

The robot must detect the blocks in the picture. Each block must be detected even when multiple blocks overlap. I therefore use Mask R-CNN, which performs segmentation in addition to detection.

Link to the dataset for Mask R-CNN

For this, I create a dataset by arranging the actual blocks, dice, and base plate of the tower game at various angles and photographing them. The images are then labeled with the VGG Image Annotator.

After creating the dataset, I train Mask R-CNN on it and check the prediction results.
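
As an illustration of how the labeled data could be read back, here is a minimal sketch that parses a VGG Image Annotator JSON export into polygons per image. It assumes the VIA 2.x export layout (`filename`, `regions`, and `shape_attributes` with `all_points_x` / `all_points_y`). The region attribute name `label` and the sample file name are hypothetical, not taken from the actual dataset.

```python
import json


def load_via_polygons(via_json_text):
    """Parse a VIA JSON export into {filename: [(label, [(x, y), ...]), ...]}."""
    annotations = json.loads(via_json_text)
    result = {}
    for entry in annotations.values():
        regions = entry.get("regions", [])
        # VIA 1.x stores regions as a dict, VIA 2.x as a list.
        if isinstance(regions, dict):
            regions = list(regions.values())
        polygons = []
        for region in regions:
            shape = region["shape_attributes"]
            if shape.get("name") != "polygon":
                continue  # this sketch only handles polygon regions
            points = list(zip(shape["all_points_x"], shape["all_points_y"]))
            # "label" is a hypothetical attribute name chosen at annotation time.
            label = region.get("region_attributes", {}).get("label", "unknown")
            polygons.append((label, points))
        result[entry["filename"]] = polygons
    return result


def bounding_box(points):
    """Return (x_min, y_min, x_max, y_max) of a polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# Hypothetical one-image export with a single rectangular block outline.
sample = json.dumps({
    "img_001.jpg12345": {
        "filename": "img_001.jpg",
        "size": 12345,
        "regions": [
            {
                "shape_attributes": {
                    "name": "polygon",
                    "all_points_x": [10, 60, 60, 10],
                    "all_points_y": [20, 20, 50, 50],
                },
                "region_attributes": {"label": "block"},
            }
        ],
        "file_attributes": {},
    }
})

parsed = load_via_polygons(sample)
label, points = parsed["img_001.jpg"][0]
print(label, bounding_box(points))
```

Each polygon can then be rasterized into a per-instance mask for training. The bounding box helper is only there to check that the annotations look sane.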

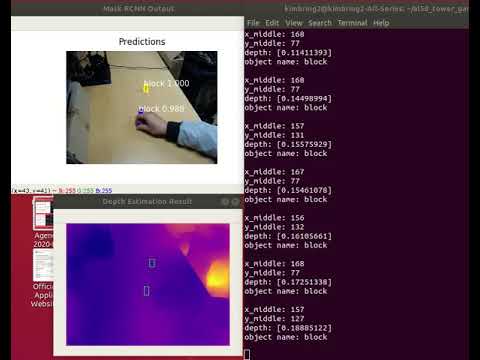

After training on single images, I apply Mask R-CNN to the image stream from the webcam. Because the 2D information and the depth information of each object are used together as inputs to the neural network of the robot agent, the outputs of Mask R-CNN and monocular depth estimation must be displayed on the same screen.
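
One way to picture how the 2D and depth information could be combined is the sketch below. It takes one instance mask and a depth map of the same size and summarizes them as a small feature tuple. The feature choice (centroid, area, median depth) and the function name `object_features` are my assumptions for illustration, not the final input format of the agent. The depth values are in whatever unit the depth estimator outputs.

```python
from statistics import median


def object_features(mask, depth):
    """Summarize one object as (centroid_x, centroid_y, area, median_depth).

    mask  : 2D list of 0/1 values, 1 where the object is.
    depth : 2D list of floats with the same shape as mask.
    Returns None if the mask is empty.
    """
    xs, ys, depths = [], [], []
    for row_idx, (mask_row, depth_row) in enumerate(zip(mask, depth)):
        for col_idx, (inside, d) in enumerate(zip(mask_row, depth_row)):
            if inside:
                xs.append(col_idx)
                ys.append(row_idx)
                depths.append(d)
    if not xs:
        return None
    area = len(xs)
    # The median is less sensitive than the mean to noisy depth pixels at the mask edge.
    return (sum(xs) / area, sum(ys) / area, area, median(depths))


# Toy 3x4 frame: a 2x2 object near the top, background far away.
mask = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
depth = [
    [9.0, 2.0, 2.0, 9.0],
    [9.0, 3.0, 3.0, 9.0],
    [9.0, 9.0, 9.0, 9.0],
]

print(object_features(mask, depth))  # (1.5, 0.5, 4, 2.5)
```

Running this once per detected instance gives one feature tuple per block, die, or base plate in the frame.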

When I run the model while moving the camera through various angles, detection and segmentation are not performed completely. I probably need more training data.
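
To put a number on how incomplete a segmentation is, one common measure is the intersection over union (IoU) between a predicted mask and a hand-labeled mask. The sketch below is a generic illustration and not a measurement from this project. Returning 0.0 when both masks are empty is a convention I chose here.

```python
def mask_iou(pred, truth):
    """Intersection over union of two binary masks given as 2D lists of 0/1."""
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                intersection += 1
            if p or t:
                union += 1
    # Convention for this sketch: two empty masks give 0.0.
    return intersection / union if union else 0.0


# Toy example: the prediction is shifted one pixel to the left of the label.
pred = [
    [1, 1, 0],
    [1, 1, 0],
]
truth = [
    [0, 1, 1],
    [0, 1, 1],
]

print(round(mask_iou(pred, truth), 3))  # 0.333
```

Tracking this value per camera angle would show where additional training images are most needed.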

To apply reinforcement learning, a reward must be given when the robot arm reaches a specific place. In addition, the gripper of the robot arm must also be recognized by Mask R-CNN.
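
A minimal sketch of such a reward is shown below, assuming the gripper and target positions are already available as 3D points (for example from the gripper mask and the estimated depth). The function name `reach_reward`, the tolerance, and the reward values are hypothetical placeholders, not tuned settings.

```python
import math


def reach_reward(gripper_xyz, target_xyz, tolerance=0.02, step_penalty=-0.01):
    """Sparse reward: 1.0 when the gripper is within tolerance of the target.

    Returns (reward, done). All numeric defaults are illustrative assumptions.
    """
    distance = math.dist(gripper_xyz, target_xyz)
    if distance <= tolerance:
        return 1.0, True
    # A small penalty per step encourages reaching the target quickly.
    return step_penalty, False


print(reach_reward((0.10, 0.20, 0.05), (0.10, 0.21, 0.05)))  # (1.0, True)
print(reach_reward((0.00, 0.00, 0.00), (0.30, 0.00, 0.00)))  # (-0.01, False)
```

A dense alternative would return the negative distance at every step. I show the sparse form because it matches the description of rewarding arrival at a specific place.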

PLAYING A BLOCK STACKING GAME USING AFFORDABLE MANIPULATOR AND CAMERA

EXPERIMENTAL VALIDATION

DISCUSSION

CONCLUSION

CODE FOR PAPER

REFERENCE

- Deisenroth, M., Rasmussen, C., & Fox, D. (2011). Learning to Control a Low-Cost Manipulator using Data-Efficient Reinforcement Learning. Robotics: Science and Systems VII. doi:10.15607/RSS.2011.VII.008.

- Alhashim, I., & Wonka, P. (2018). High Quality Monocular Depth Estimation via Transfer Learning. arXiv preprint arXiv:1812.11941.

- Abdulla, W. (2018). Mask R-CNN for object detection and instance segmentation on Keras and TensorFlow. GitHub repository.